go-crawler

A Web Crawler written in Go. This was developed with the purpose of me trying to learn to write idiomatic Go

The crawler is limited to one domain - Given domain http://www.example.com it will crawl all pages within the domain, but will not follow external links.

The crawler will also provide link information between the pages of the crawled domain. For example, given a link from http://example.com/ to http://example.com/about/ and another link from http://example.com/about/ to http://example.com/ the output will be:

http://example.com/

http://example.com/about/

http://example.com/

Installation

To install go-crawler, you'll need to have Golang installed and environment variable $GOPATH appropriately set.

$ go get github.com/antoniou/go-crawler

Usage

$ go-crawler -o tom_sitemap.out http://tomblomfield.com

To see what happens during crawling, enable verbose mode:

$ go-crawler --verbose -o tom_sitemap.out http://tomblomfield.com

Assumptions

Certain assumptions about the requirements should be made:

- The crawler does not crawl subdomains of the specific domain provided.

- If a specific path within a domain is given as a seed URL ( e.g http://tomblomfield.com/about), then crawling will only happen within that path.

- Crawling happens only for a specific scheme (e.g, http or https, not both)

Architecture

The solution goes through two major phases:

- Crawling the site and creating an in-memory representation of a sitemap.

- Exporting the sitemap to a file representation.

Crawling the site

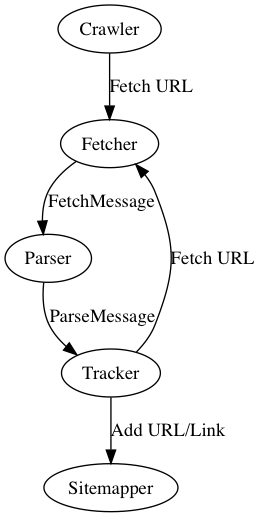

Crawling happens with asynchronous channel-based communication between the following components:

- Fetcher: Awaits for requests to fetch pages, and hands over responses to the requests to the Parser

- Parser: Awaits for http responses (from Fetcher), parses the responses and hands over URLs that are found to the Tracker

- Tracker: Awaits for URLs that have been found (from Parser) and checks whether the URLs have been already crawled. If not, the Tracker hands over new requests to the fetcher.

- Sitemapper: Holds the sitemap representation and awaits to receive new nodes and edges to add to the sitemap (from the Tracker)

The Crawler is the orchestrating component that starts all the workers and awaits for all the workers to be in "WAITING" state, to detect that the work has finished.

Exporting the sitemap

After crawling the site, the sitemap is exported using an Exported. The default Exporter outputs the sitemap to a file using an indented structure to represent the links between pages.

The Crawler initiates the export by creating a new Exporter. The exporter then queries the Sitemapper to walk through the Graph

Average Space Complexity :

The solution makes use of bloom filters, graphs and hashmaps:

Given N crawled pages and M links between the pages, each of average size L, their space complexity is:

- Bloom Filters used for pages: O(1) - fixed Space

- HashMap used for pages: O(N)

- Graph Nodes used for pages: O(N)

- Graph Edges used for links: O(M)

- Storing the pages to be parsed: O(L), since the current Parser implementation parses one page at a time.

Therefore, the average space complexity is linear to the maximum of pages and links between them:

O(N + M + L)

At the moment there are 4 asynchronous workers that handle the crawling. Components are working in parallel and are communicating asynchronously, however each component is currently single threaded.

To improve performance, we can have multiple workers of each type waiting to receive and process work. To achieve this, we'd need to extract the channels from each individual component, so that each channel can have multiple receivers.

When crawling large documents, performance can be improved by chunking the document between several Parsers.

We can also take advantage of files like robots.txt and the provided website sitemap as hints to the website structure.

Future Work/Improvements:

- Parallelize implementation even further as described in Performance

- Bloom Filter False-positive Rate: We make use of Bloom Filters to detect whether a page has been crawled or not. If the size of the bloom filters is not adequately large, it can have a false positive response. To deal with this, we'd have to create an adequately large filter.

- Improve coverage of unit tests.

- At the moment, a site is completely crawled into a Sitemap in memory and then exported/shown. This will not work for large websites whose sitemap might not even fit in memory.

- At the moment, the crawler limits itself within a single scheme (e.g, http). For example, if http://www.example.com is given as input, the crawler will not follow links to https://www.example.com/about. This is an improvement that the crawler needs.

- Queries (e.g, ?account=21312) and Fragments (#About) are not removed, hence a page can be crawled multiple times because of them.